Overview of the NVIDIA A100 80GB PCIe GPU

The NVIDIA A100 80GB PCIe is a high-performance GPU designed to accelerate AI, data analytics, and high-performance computing (HPC) workloads in elastic data centers. Based on the NVIDIA Ampere architecture, the A100 Tensor Core GPU supports a wide range of numerical precisions, including double precision (FP64), single precision (FP32), half precision (FP16), and 8-bit integer (INT8), making it a versatile accelerator for diverse computational tasks. It is a dual-slot, 10.5-inch PCI Express Gen4 card with a passive heat sink, so it relies on system airflow for cooling. The card has a maximum thermal design power (TDP) of 300 W.

Unprecedented Memory and Bandwidth

A standout feature of the A100 80GB model is its memory capacity and bandwidth. It is equipped with 80 GB of High Bandwidth Memory (HBM2e), twice the capacity of the 40 GB A100, which lets it hold larger models and datasets entirely in GPU memory. This is complemented by a peak memory bandwidth of up to 1.94 terabytes per second (TB/s), which NVIDIA bills as the highest of any PCIe card. Together, the larger capacity and higher bandwidth shorten time to solution for memory-bound problems: more data stays close to the processing cores, and less time is spent waiting on data transfers.
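
To put the two figures in perspective, the sketch below computes a lower bound on the time needed to read the full 80 GB once at the quoted peak bandwidth. It assumes decimal units (1 TB/s = 1000 GB/s) and that the peak rate is actually sustained, which real workloads generally do not achieve; the function name is hypothetical and used only for illustration.

```python
# Back-of-envelope estimate using the figures quoted in the text.
# Assumption: decimal units, and the peak rate is sustained throughout.
MEMORY_GB = 80
PEAK_BANDWIDTH_GB_PER_S = 1940  # 1.94 TB/s


def full_sweep_time_ms(memory_gb=MEMORY_GB,
                       bandwidth_gb_per_s=PEAK_BANDWIDTH_GB_PER_S):
    """Lower bound (in ms) on reading `memory_gb` once at peak bandwidth."""
    return memory_gb / bandwidth_gb_per_s * 1000.0


sweep_ms = full_sweep_time_ms()
print(f"One pass over {MEMORY_GB} GB takes at least {sweep_ms:.1f} ms")
# prints: One pass over 80 GB takes at least 41.2 ms
```

An algorithm that must touch every byte of resident data on each iteration therefore cannot run faster than roughly 24 iterations per second on this card, regardless of compute throughput.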

Architectural Innovations: MIG and NVLink

The A100 incorporates two key architectural technologies to maximize utilization and performance. The Multi-Instance GPU (MIG) feature allows a single A100 GPU to be partitioned into as many as seven smaller, fully isolated GPU instances. Each instance has its own dedicated memory, cache, and compute cores, so data centers can match GPU resources to workloads of varying size while providing a predictable quality of service (QoS) to each one. For scaling performance across multiple GPUs, the A100 supports NVIDIA NVLink technology. Connecting a pair of A100 cards with three NVLink bridges provides a total bandwidth of 600 GB/s, nearly ten times that of PCIe Gen4, to maximize application throughput for the largest computational workloads.
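
The comparison between NVLink and PCIe Gen4 can be checked with simple arithmetic. The sketch below is not from the source: it assumes a x16 PCIe Gen4 link at 16 GT/s per lane with 128b/130b encoding, and it assumes the 600 GB/s NVLink figure is a bidirectional aggregate, so it is compared against PCIe bandwidth in both directions. The function name is hypothetical.

```python
# Assumptions (not stated in the text): PCIe Gen4 x16, 16 GT/s per lane,
# 128b/130b line encoding; NVLink 600 GB/s treated as bidirectional total.
NVLINK_TOTAL_GB_PER_S = 600.0


def pcie_gb_per_s_per_direction(gt_per_s=16.0, lanes=16, encoding=128 / 130):
    """Usable PCIe bandwidth in GB/s for one direction of the link."""
    # GT/s per lane * lanes * encoding efficiency gives Gb/s; divide by 8 for GB/s.
    return gt_per_s * lanes * encoding / 8.0


pcie_one_way = pcie_gb_per_s_per_direction()   # about 31.5 GB/s
pcie_bidirectional = 2 * pcie_one_way          # about 63 GB/s
ratio = NVLINK_TOTAL_GB_PER_S / pcie_bidirectional

print(f"PCIe Gen4 x16: {pcie_bidirectional:.1f} GB/s bidirectional")
print(f"NVLink / PCIe ratio: {ratio:.1f}x")
```

Under these assumptions the ratio comes out at about 9.5, consistent with the "nearly ten times" figure.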

Enhanced Security with Root of Trust

Security is a critical component of the A100's design. The GPU features a primary hardware root of trust integrated within the GPU chip, which enables secure boot, secure firmware upgrades, and firmware rollback protection. For enhanced security, specific models of the A100 80GB PCIe card include an onboard CEC1712 chip that acts as a secondary root of trust. This secondary chip extends security capabilities to include advanced features like firmware attestation, key revocation, and out-of-band firmware updates, providing a robust security foundation by verifying firmware integrity before the GPU is allowed to boot.

Physical and Environmental Specifications

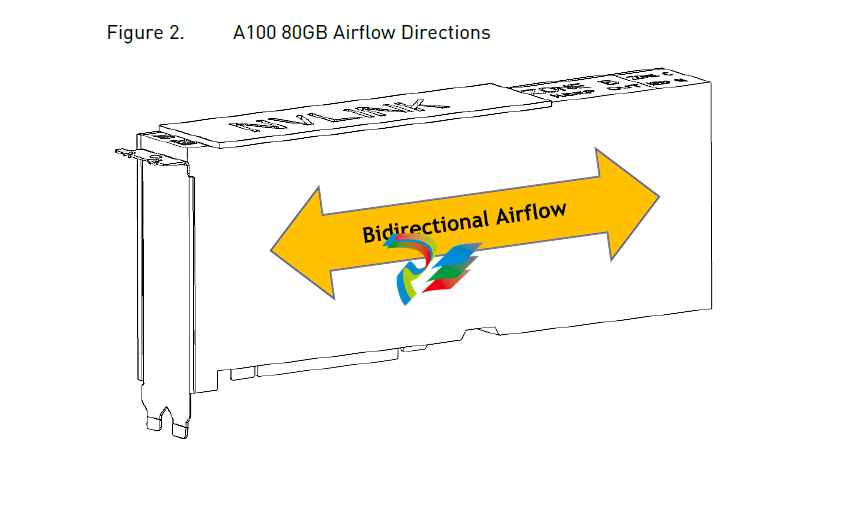

The A100 80GB PCIe card weighs approximately 1170 grams (board only) and conforms to a full-height, full-length (FHFL) dual-slot form factor. It requires a single CPU 8-pin auxiliary power connector. The card is designed to operate in ambient temperatures from 0°C to 50°C, with a short-term tolerance of -5°C to 55°C. On the software side, it requires NVIDIA driver release R470 or later and supports CUDA 11.4 or later, along with various virtual GPU software solutions. The bidirectional heat sink accepts airflow in either direction, left-to-right or right-to-left, which gives system designers flexibility in cooling layout.
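
As an illustration of how the two temperature ranges relate, the sketch below classifies an ambient reading against them. The function name and category labels are hypothetical, and treating the range boundaries as inclusive is an assumption; the text does not say how boundary values are handled or how long "short-term" lasts.

```python
# Ranges taken from the text; inclusive boundaries are an assumption.
OPERATING_RANGE_C = (0.0, 50.0)
SHORT_TERM_RANGE_C = (-5.0, 55.0)


def classify_ambient(temp_c):
    """Classify an ambient temperature (in Celsius) against the quoted ranges."""
    low, high = OPERATING_RANGE_C
    if low <= temp_c <= high:
        return "operating"
    low, high = SHORT_TERM_RANGE_C
    if low <= temp_c <= high:
        return "short-term"
    return "out-of-range"


for reading in (25.0, 52.5, -3.0, 60.0):
    print(f"{reading:5.1f} C -> {classify_ambient(reading)}")
```

A monitoring script could use such a check to flag readings in the short-term band for attention before they move out of range.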
