ABBIndustrial Networks Connecting Controllers via OPC

the 800xA for AC100 extension but can also be installed stand-alone [25].

All DSPs sent from AC160 are available in the AC100 OPC Server if not set

to be filtered out by the configuration tool. It is also possible by default to write

to these variables, however, if not configured otherwise the values written on the

OPC Server are not sent using planned DSPs but using event-based Service Data

Protocol (SDP) messages. As a consequence, all communication towards AC160

becomes very slow and is not even guaranteed to arrive. It is therefore necessary to

implement receiving DSPs in the AC160 and to configure the AC100 OPC Server

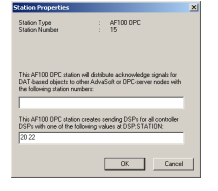

to send DSPs. The latter is done using the Bus Configuration Builder [26]. In the

properties dialog of the OPC station it is possible to specify the DSPs that have to

be sent [27]. Using this method, data transmission towards the AC160 becomes as

fast as reading from it

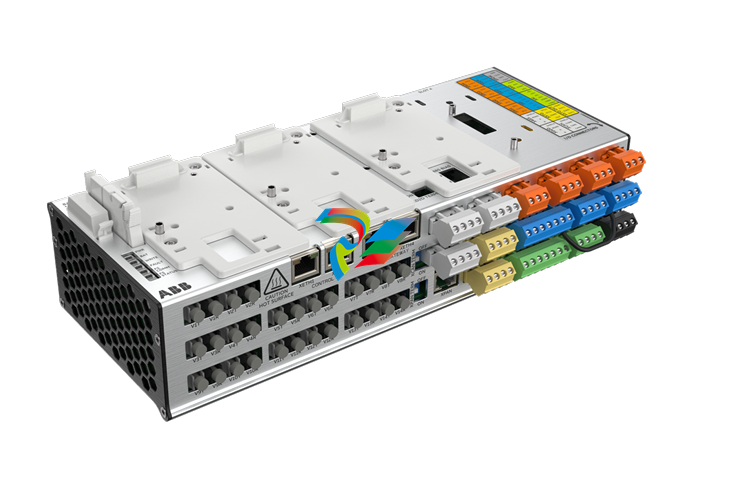

3.3 AC800M Side

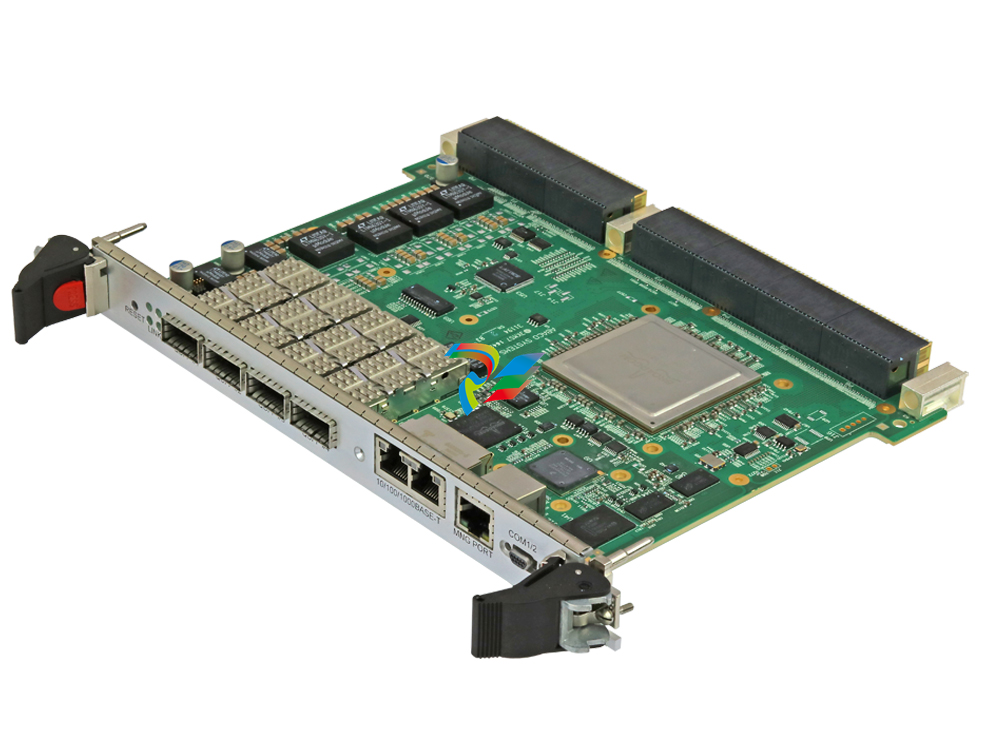

For all of our evaluations we connected the AC800M to our personal computer via Ethernet. First, this connection is necessary for programming and managing the controller. Second, we displayed the measured results using OPC AE and therefore needed it to let the AC800M OPC Server communicate with the controller. Finally, we tested this connection for our own purposes, as described in the following subsection.

3.3.1 Communication via MMS

MMS using TCP/IP over Ethernet is the standard means of communication between computer and controller, and since the AC800M OPC Server provides a ready-to-use connection, we included this approach in our evaluations. However, the performance of this connection depends directly on the CPU load of the AC800M as well as on the Ethernet network traffic [28].

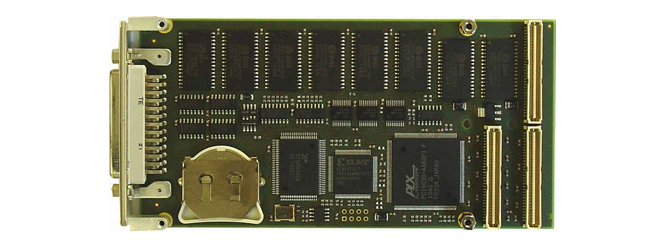

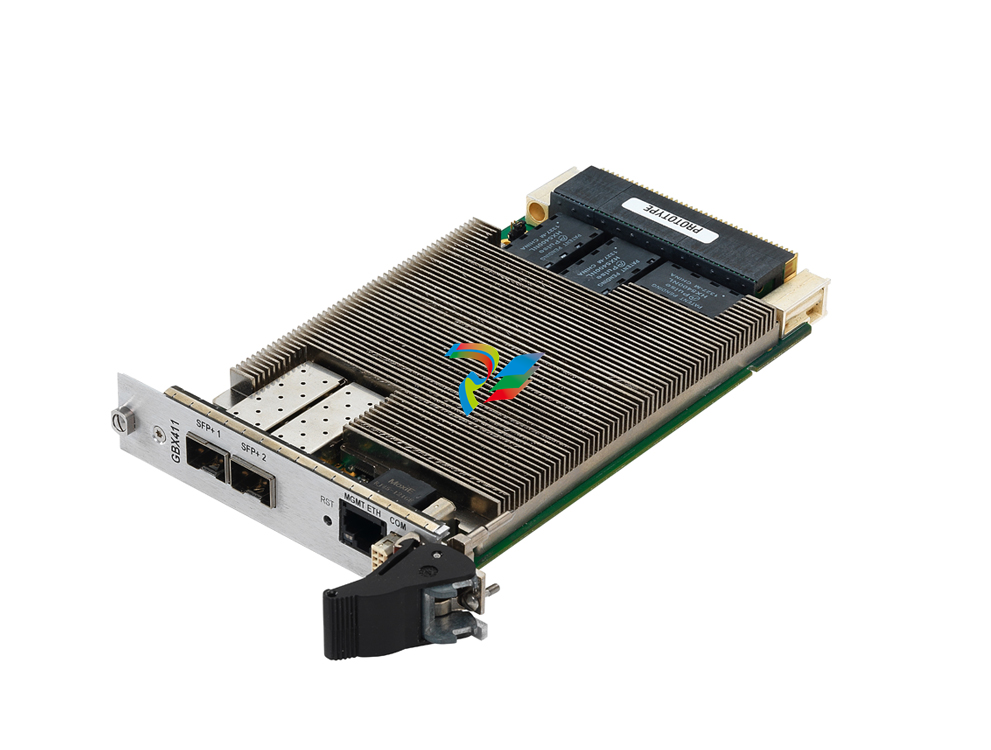

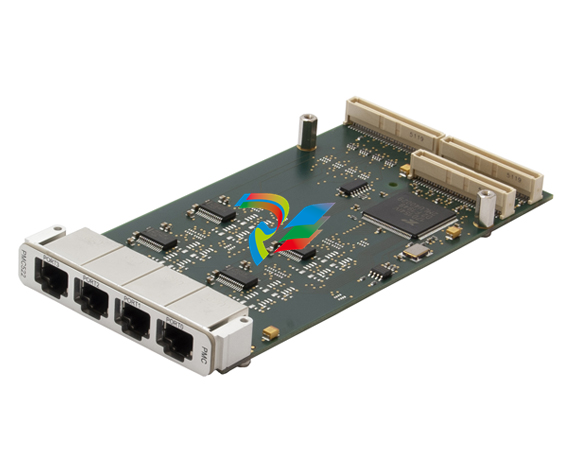

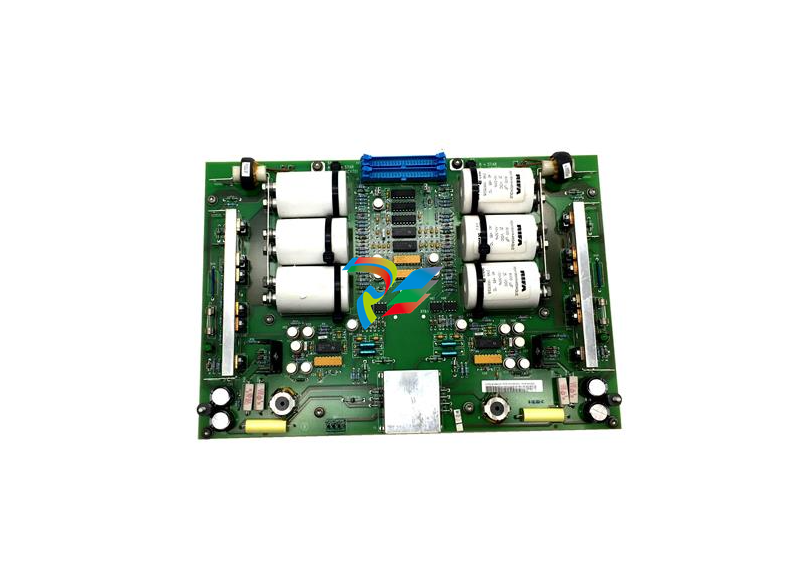

3.3.2 Communication via PROFIBUS

Because a standard Ethernet link lacks real-time behavior, we planned from the outset to test this approach against a PROFIBUS connection. With the CI854A, a sophisticated PROFIBUS communication interface exists for the AC800M; it supports a transmission rate of 12 Mbit/s and does not depend on the CPU of the processor module. These reasons, together with the wide acceptance of PROFIBUS throughout Europe, made it our first choice among fieldbus systems. Another promising bus, FOUNDATION FIELDBUS High Speed Ethernet (FF HSE), was dismissed once we recognized that the corresponding communication interface, the CI860, has a very poor throughput of only 500 32-bit values per second [17].
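To put the two figures quoted above side by side, the following minimal sketch converts the CI860 throughput into a bit rate and compares it with the 12 Mbit/s line rate of the CI854A. The function name and constants are illustrative. The PROFIBUS figure is a raw line rate that still includes protocol overhead, so the resulting ratio is only a rough upper bound, not a measured result.

```python
# Illustrative arithmetic only; the constants are the figures quoted in the text.
CI860_VALUES_PER_SECOND = 500     # CI860 throughput in 32-bit values per second
BITS_PER_VALUE = 32
PROFIBUS_LINE_RATE = 12_000_000   # CI854A raw line rate in bit/s (12 Mbit/s)


def payload_bit_rate(values_per_second: int, bits_per_value: int) -> int:
    """Return the payload bit rate in bit/s for a given value throughput."""
    return values_per_second * bits_per_value


ci860_bits_per_second = payload_bit_rate(CI860_VALUES_PER_SECOND, BITS_PER_VALUE)

# Assumption: comparing a payload rate with a raw line rate overstates the
# real advantage, because PROFIBUS telegram overhead is ignored here.
upper_bound_ratio = PROFIBUS_LINE_RATE / ci860_bits_per_second

print(f"CI860 payload rate: {ci860_bits_per_second} bit/s")
print(f"Raw line rate ratio (upper bound): {upper_bound_ratio:.0f}")
```

Even with generous allowance for overhead, the gap of several orders of magnitude is consistent with the decision described above.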

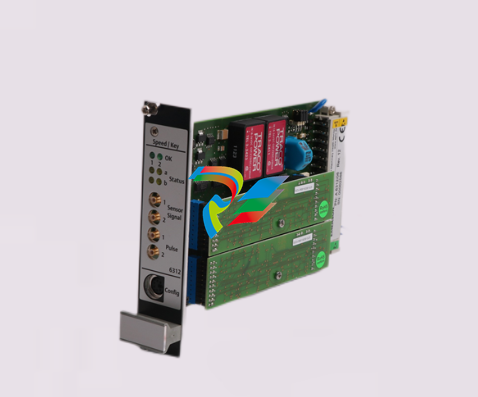

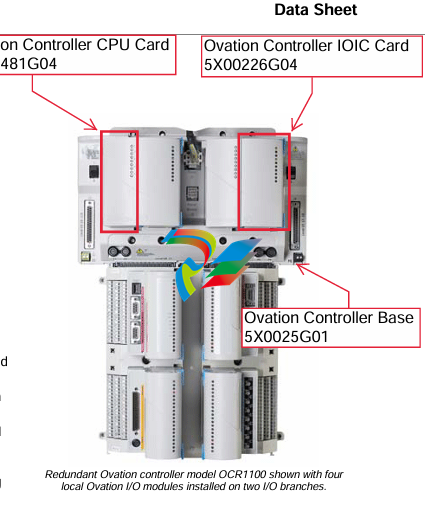

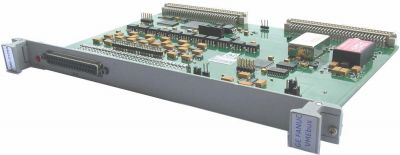

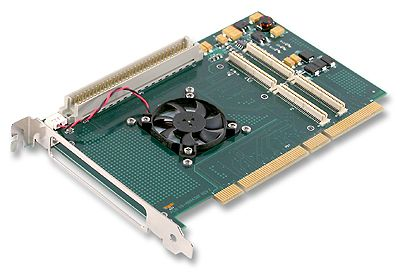

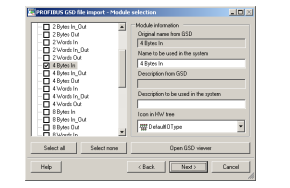

Figure 3.4: Module selection in Device Import Wizard

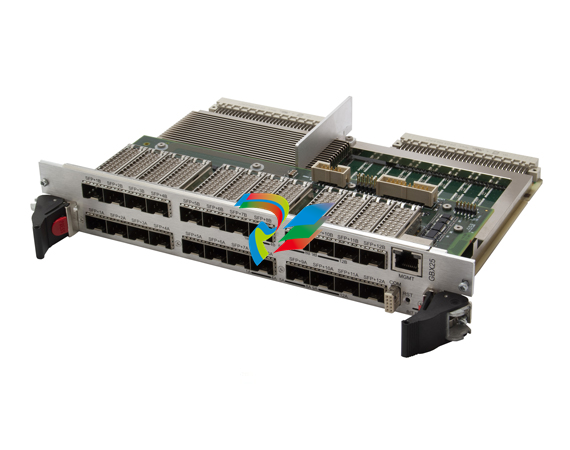

A typical use of PROFIBUS is to connect several slave devices such as sensors

with one or few masters (for example a controller) on one single bus (up to 126

devices). Often the amount of data per slave is very small, since it only represents

an input/output state or a measured value. Therefore it is rather exotic to use

PROFIBUS as a point-to-point communication with a big amount of data as we

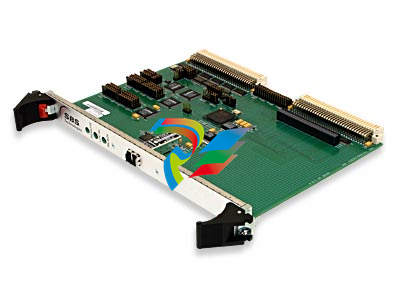

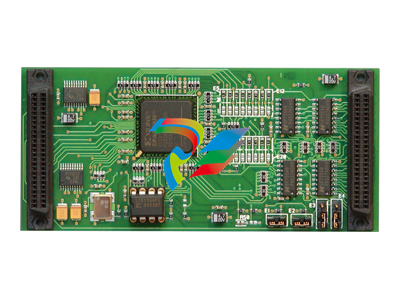

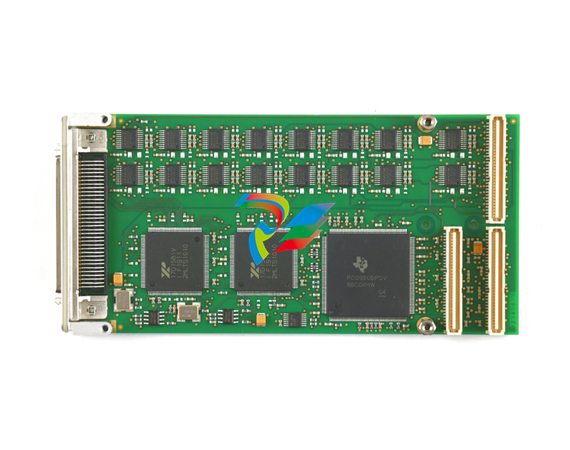

did in our case. Since the first test showed that the Beckhoff PROFIBUS solution

has performance problems, it was decided to test a second PCI card/OPC server

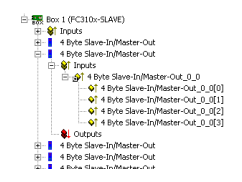

product from Woodhead Electronics. Both PROFIBUS cards were configured in a

way that one module consists of four 8-bit bytes to transport integers or two 16-bit

words to transport floats. These mappings were realized using the Device Import

Wizard in CBM and the PCI card’s configuration tools [29].
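The module layout described above can be illustrated with a small sketch that splits a 32-bit integer into four 8-bit bytes and a 32-bit float into two 16-bit words, and reassembles them on the receiving side. The helper names are hypothetical, and the sketch assumes signed integers, IEEE 754 single-precision floats and big-endian byte order, none of which is stated in the text.

```python
import struct

# Assumption: big-endian ("network") byte order. The actual order used by
# the PROFIBUS cards and the controller is not given in the text.
BYTE_ORDER = ">"


def int_to_bytes(value: int) -> list[int]:
    """Split a signed 32-bit integer into four 8-bit bytes."""
    return list(struct.pack(BYTE_ORDER + "i", value))


def bytes_to_int(octets: list[int]) -> int:
    """Reassemble four 8-bit bytes into a signed 32-bit integer."""
    return struct.unpack(BYTE_ORDER + "i", bytes(octets))[0]


def float_to_words(value: float) -> list[int]:
    """Split a 32-bit float into two unsigned 16-bit words."""
    raw = struct.pack(BYTE_ORDER + "f", value)
    return list(struct.unpack(BYTE_ORDER + "HH", raw))


def words_to_float(words: list[int]) -> float:
    """Reassemble two unsigned 16-bit words into a 32-bit float."""
    raw = struct.pack(BYTE_ORDER + "HH", *words)
    return struct.unpack(BYTE_ORDER + "f", raw)[0]


if __name__ == "__main__":
    print(int_to_bytes(1))
    print(float_to_words(1.0))
```

If sender and receiver disagree on byte or word order, the values still arrive but decode incorrectly, so both sides of such a mapping have to use the same convention.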

3.4 Bridging Software

A core part of our system is the OPC bridging software, which establishes the connection between two OPC servers. Two different products were tested: OPC Data Manager (ODM) from Matrikon and LinkMaster from Kepware. Both products were selected for evaluation because of their specifications, their bulk configuration capabilities (CSV file import/export) and their availability for testing.

There are different possibilities for connections in OPC Data Access. The standard method implemented in both programs is subscription: the bridging software subscribes as a client to the source OPC server A, from which it reads. The source server then pushes changed values to the client at a fixed update rate (the fastest supported rate is 10 milliseconds for LinkMaster and 1 ms for ODM), and the client writes them to the destination server. As a consequence, the load and therefore the performance of the bridging software depend on the number of changes.
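The subscription mechanism can be sketched with a small simulation. The classes below are hypothetical stand-ins and do not use any real OPC library or the API of either product. They only illustrate that, per update cycle, the bridge performs one write for each changed value, so its load follows the number of changes rather than the number of configured signals.

```python
_MISSING = object()  # sentinel for "never sent before"


class SourceServer:
    """Hypothetical stand-in for the source OPC server (not a real OPC API)."""

    def __init__(self, values):
        self.values = dict(values)
        self._last_sent = {}

    def changed_values(self):
        """Return only the items whose value changed since the last update."""
        changed = {
            item: value
            for item, value in self.values.items()
            if self._last_sent.get(item, _MISSING) != value
        }
        self._last_sent.update(changed)
        return changed


class Bridge:
    """Hypothetical bridge: receives changes and writes them to a destination."""

    def __init__(self, source, destination, mapping):
        self.source = source
        self.destination = destination  # plain dict standing in for server B
        self.mapping = mapping          # source item name -> destination item name
        self.total_writes = 0

    def on_update(self):
        """Handle one update cycle; return the number of writes performed."""
        changes = self.source.changed_values()
        for source_item, value in changes.items():
            self.destination[self.mapping[source_item]] = value
        self.total_writes += len(changes)
        return len(changes)


source = SourceServer({"A.x": 1, "A.y": 2, "A.z": 3})
destination = {}
bridge = Bridge(source, destination, {"A.x": "B.x", "A.y": "B.y", "A.z": "B.z"})

first = bridge.on_update()   # all three items are new
source.values["A.y"] = 20
second = bridge.on_update()  # only A.y changed
third = bridge.on_update()   # nothing changed
print(first, second, third)
```

In this sketch a cycle without changes costs no writes, which mirrors the statement that the performance of the bridging software depends on the number of changes.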

Since OPC DA is organized in subscription groups, the signal pairs in the bridging software were combined in groups of 10 to 32 signals with identical update rates of 10 milliseconds. Both programs also support the (faster) processing of arrays instead of single values. However, we could not take advantage of this solution since the OPC servers do not support arrays.
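The grouping of signal pairs lends itself to bulk configuration. The following sketch chunks a list of signal pairs into groups of at most 32 and writes them as CSV. The column names, group names and item names are hypothetical and do not reproduce the import format of either product. Plain chunking also does not enforce the lower bound of 10 signals per group, which would need extra handling for small remainders.

```python
import csv
import io

MAX_GROUP_SIZE = 32   # upper bound on group size taken from the text
UPDATE_RATE_MS = 10   # update rate used for all groups in the text


def build_groups(pairs, group_size=MAX_GROUP_SIZE):
    """Chunk a list of (source, destination) pairs into consecutive groups."""
    return [pairs[i:i + group_size] for i in range(0, len(pairs), group_size)]


def groups_to_csv(groups, update_rate_ms=UPDATE_RATE_MS):
    """Render the groups as CSV text using a hypothetical column layout."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["group", "update_rate_ms", "source_item", "destination_item"])
    for index, group in enumerate(groups):
        for source_item, destination_item in group:
            writer.writerow(
                [f"Group{index:03d}", update_rate_ms, source_item, destination_item]
            )
    return buffer.getvalue()


# Hypothetical item names for 74 signal pairs.
pairs = [(f"AC160.Signal{i}", f"AC800M.Signal{i}") for i in range(74)]
groups = build_groups(pairs)
csv_text = groups_to_csv(groups)
print([len(group) for group in groups])
```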

3.5 Resulting Test Systems

This section shows more detailed models of the three resulting test system setups, following the considerations above. The AC160 side (illustrated on the left-hand side) stays the same for all three setups. The models visualize one main challenge of our task, namely the large number of different interfaces, with each component running on its own unsynchronized cycle time. While the models are not complete, they include all parts that are considered relevant at our time scale of milliseconds. It should be noted that the impact of BIOB and CEX on